Managing Business Processes with Artificial Intelligence: From Automation to Autonomy

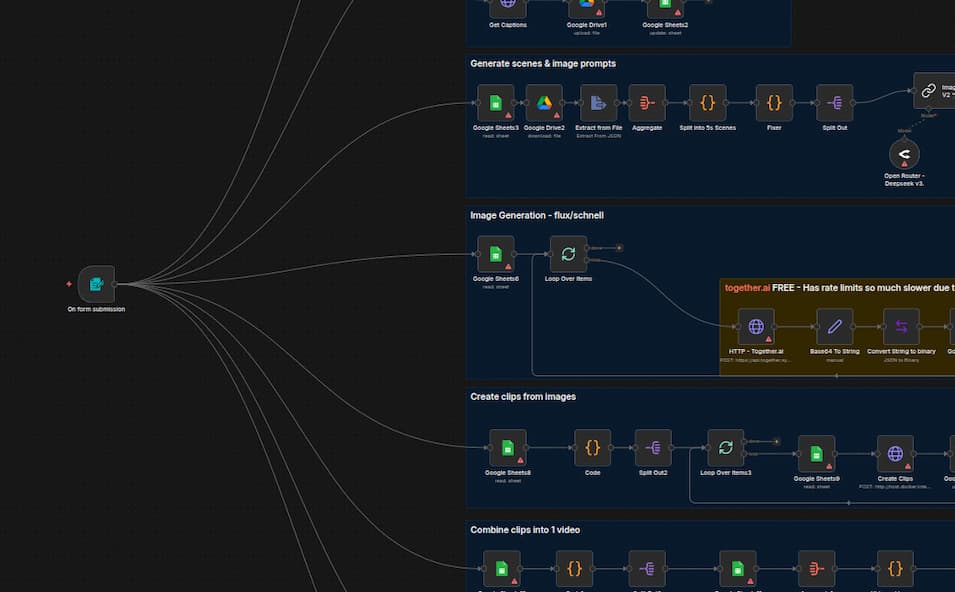

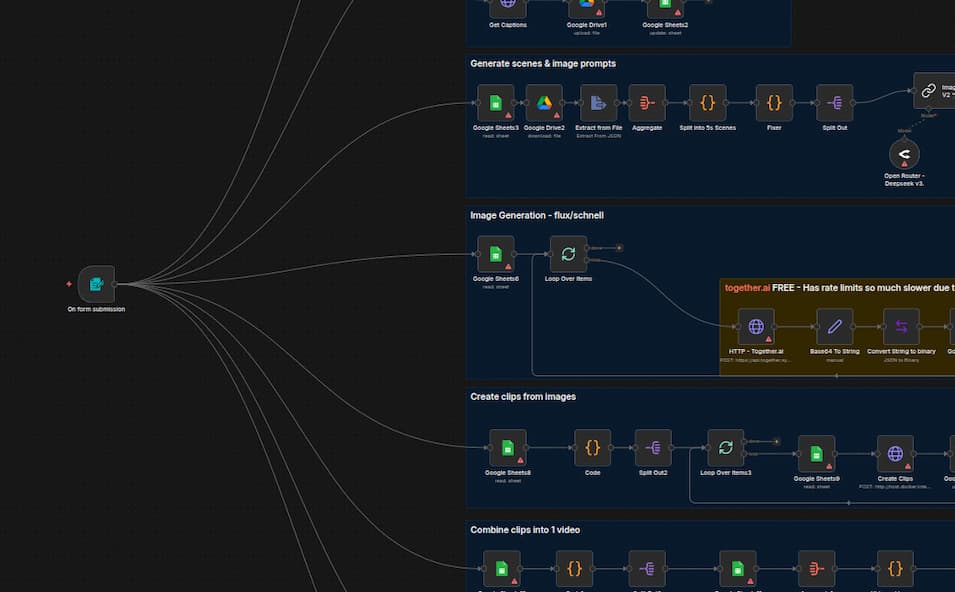

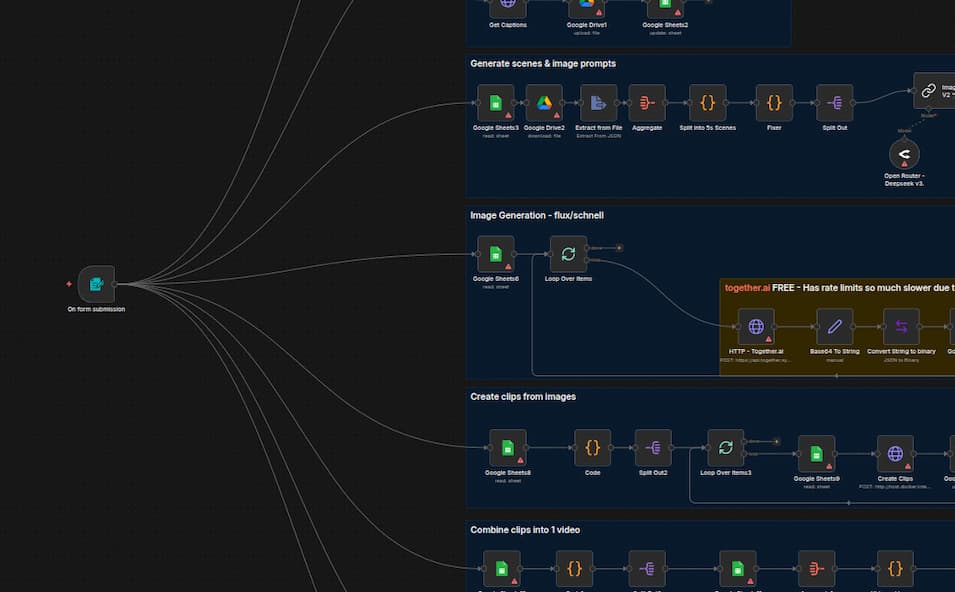

Business process automation with artificial intelligence goes beyond the traditional RPA approach. Today, AI agents can independently plan, execute, a...
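The difference between scripted RPA and an agent that plans its own steps can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not the approach described in this article. A classic RPA bot replays a fixed script. The planner below instead searches for a sequence of actions that reaches a goal from whatever state it starts in. It uses plain breadth-first search over hypothetical `Action` records as a stand-in for an LLM-based planner. The action names (`extract_invoice`, `validate_vendor`, `post_to_erp`) and the facts they require or produce are invented for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    # Hypothetical action record: not part of any real agent framework.
    name: str
    requires: frozenset[str]  # facts that must hold before the action can run
    adds: frozenset[str]      # facts that hold after the action succeeds


def plan(state: set[str], goal: set[str], actions: list[Action]) -> list[str]:
    """Breadth-first search for the shortest action sequence that reaches the goal."""
    start = frozenset(state)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        facts, path = queue.popleft()
        if goal <= facts:  # every goal fact already holds
            return path
        for action in actions:
            # Only consider actions whose preconditions hold and that add something new.
            if action.requires <= facts and not action.adds <= facts:
                nxt = facts | action.adds
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [action.name]))
    raise ValueError("goal unreachable with the available actions")


# Hypothetical invoice-processing actions, for illustration only.
ACTIONS = [
    Action("extract_invoice", frozenset({"email_received"}), frozenset({"invoice_data"})),
    Action("validate_vendor", frozenset({"invoice_data"}), frozenset({"vendor_ok"})),
    Action("post_to_erp", frozenset({"invoice_data", "vendor_ok"}), frozenset({"posted"})),
]

print(plan({"email_received"}, {"posted"}, ACTIONS))
# ['extract_invoice', 'validate_vendor', 'post_to_erp']
```

Because the plan is derived from the current state, starting from `{"email_received", "vendor_ok"}` yields `['extract_invoice', 'post_to_erp']`, skipping the vendor check that a fixed RPA script would replay regardless.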


